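An Introduction to Live Migration of Xen Virtual Machines

Xen provides a powerful feature: live migration. It lets a running domain be moved to another Xen server at the cost of only a minimal service interruption.

The main advantages of Xen live migration are as follows:

1. Used together with a high-availability solution such as heartbeat, Xen live migration can give us a system that "never breaks down". Recent versions of SUSE Linux Enterprise Server and Red Hat Enterprise Linux also use Xen to provide various high-availability solutions. You can meet the demanding requirements of different services while keeping all critical business services running without interruption.

2. It lets us maintain the physical servers that host virtual machines proactively, treating problems before they appear. You can monitor a server and resolve potential or suspected problems right away by moving the systems elsewhere.

3. It makes load balancing across multiple servers possible, so that we can make better use of all the computing resources in the enterprise. Note that the open-source version of Xen does not currently support triggering a live migration automatically when a fault is detected on dom0.

4. It makes it easier to add computing capacity to the system configuration when needed.

5. You can replace hardware as needed without interrupting the services running on it.

Knowing the benefits of live migration is not enough, so let's now set up Xen live migration in practice.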

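Lab overview:

1. There is one iSCSI shared storage server, used by both Xen virtualization platforms.

2. The lab has two Xen virtualization platforms. One of them hosts a simple busybox virtual machine whose image file is stored on the iSCSI shared storage. (Here I ended up creating a simple busybox virtual machine on both platforms.)

3. One busybox virtual machine instance is live-migrated between the Xen virtualization platforms.

Lab architecture diagram:

Lab implementation:

I. Building the iSCSI shared storage

1. Building the iSCSI server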

Partition the disk:

# echo -n -e "n\np\n3\n\n+5G\nt\n3\n8e\nw\n" | fdisk /dev/sda

# partx -a /dev/sda

# fdisk -l /dev/sda3

Disk /dev/sda3: 5378 MB, 5378310144 bytes

255 heads, 63 sectors/track, 653 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Install the iSCSI server software:

# yum install -y scsi-target-utils

Edit the iSCSI server configuration file:

# vim /etc/tgt/targets.conf

# Add the following content:

<target iqn.2015-02.com.stu31:t1>

backing-store /dev/sda3

initiator-address 172.16.31.0/24

</target>
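The target name in the stanza above follows the iSCSI qualified name (IQN) layout: the literal prefix `iqn.`, a year and month, a reversed domain name, and an optional `:identifier` suffix. The following is a minimal sketch of a format check, assuming a POSIX shell with `grep -E`; `is_iqn` is a hypothetical helper written for illustration and is not part of scsi-target-utils.

```shell
# is_iqn is a hypothetical helper (not part of scsi-target-utils).
# It succeeds when its argument looks like an iSCSI qualified name:
#   iqn.<year>-<month>.<reversed domain>[:<identifier>]
is_iqn() {
    printf '%s\n' "$1" |
        grep -Eq '^iqn\.[0-9]{4}-(0[1-9]|1[0-2])\.[a-z0-9]([a-z0-9.-]*[a-z0-9])?(:.+)?$'
}

# Check the target name used in this article and a malformed one.
for name in 'iqn.2015-02.com.stu31:t1' 'stu31:t1'; do
    if is_iqn "$name"; then
        echo "$name: looks like a valid IQN"
    else
        echo "$name: not an IQN"
    fi
done
```

The check only covers the shape of the name; it does not confirm that the target actually exists on the server.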

Once configured, start the iSCSI server:

# service tgtd start

View the shared device:

# tgtadm --lld iscsi -m target -o show

Target 1: iqn.2015-02.com.stu31:t1

System information:

Driver: iscsi

State: ready

I_T nexus information:

LUN information:

LUN: 0

Type: controller

SCSI ID: IET 00010000

SCSI SN: beaf10

Size: 0 MB, Block size: 1

Online: Yes

Removable media: No

Prevent removal: No

Readonly: No

Backing store type: null

Backing store path: None

Backing store flags:

LUN: 1

Type: disk

SCSI ID: IET 00010001

SCSI SN: beaf11

Size: 5378 MB, Block size: 512

Online: Yes

Removable media: No

Prevent removal: No

Readonly: No

Backing store type: rdwr

Backing store path: /dev/sda3

Backing store flags:

Account information:

ACL information:

172.16.31.0/24

2. Installing and configuring the iSCSI clients

Install the iSCSI client software on both Xen virtualization nodes:

# yum install -y iscsi-initiator-utils

Start the iSCSI client:

# service iscsi start

# service iscsid start

Have the client discover the storage shared by the iSCSI server:

# iscsiadm -m discovery -t st -p 172.16.31.3

172.16.31.3:3260,1 iqn.2015-02.com.stu31:t1

Log in to the node to register the iSCSI shared device:

# iscsiadm -m node -T iqn.2015-02.com.stu31:t1 -p 172.16.31.3 -l

Logging in to [iface: default, target: iqn.2015-02.com.stu31:t1, portal: 172.16.31.3,3260] (multiple)

Login to [iface: default, target: iqn.2015-02.com.stu31:t1, portal: 172.16.31.3,3260] successful.
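The discovery output pairs a portal (`address:port,group`) with a target name, and the login command needs both. As a rough sketch of how the two steps connect, the snippet below turns a discovery line into the matching login command. `parse_discovery` is a hypothetical helper, the sample line is the one shown above, and the login command is only printed, not executed.

```shell
# parse_discovery is a hypothetical helper: it reads iscsiadm discovery
# output ("portal:port,tpgt target-iqn" per line) on stdin and prints
# "portal:port target-iqn" pairs.
parse_discovery() {
    awk 'NF == 2 { split($1, a, ","); print a[1], $2 }'
}

# Sample line copied from the discovery output above.
printf '%s\n' '172.16.31.3:3260,1 iqn.2015-02.com.stu31:t1' | parse_discovery |
while read -r portal target; do
    # Print the login command instead of running it, so the sketch is safe to try.
    echo "iscsiadm -m node -T $target -p $portal -l"
done
```

On a real node the `printf` line would be replaced by the actual `iscsiadm -m discovery` call.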

View the iSCSI storage:

# fdisk -l /dev/sdb

Disk /dev/sdb: 5378 MB, 5378310144 bytes

166 heads, 62 sectors/track, 1020 cylinders

Units = cylinders of 10292 * 512 = 5269504 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

After logging in, you can partition the disk:

# echo -e "n\np\n1\n\n+2G\nw\n" | fdisk /dev/sdb

# partx -a /dev/sdb

View the disk after partitioning:

# fdisk -l /dev/sdb

Disk /dev/sdb: 5378 MB, 5378310144 bytes

166 heads, 62 sectors/track, 1020 cylinders

Units = cylinders of 10292 * 512 = 5269504 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x8e1d9dd0

Device Boot Start End Blocks Id System

/dev/sdb1 1 409 2104683 83 Linux

II. Building the Xen virtualization environment on the client nodes

There are two Xen virtualization nodes. I already have one working Xen node, so here we add a second one as an example.

1. Configure the Xen yum repository

# vim /etc/yum.repos.d/xen4.repo

[xen4]

name=Xen4 For CentOS6

baseurl=ftp://172.16.0.1/pub/Sources/6.x86_64/xen4centos/x86_64/

gpgcheck=0

Clear the existing yum cache:

# yum clean all

2. Install Xen 4.2.5 and update the kernel to 3.7.10

# yum install -y xen-4.2.5 xen-libs-4.2.5 xen-runtime-4.2.5 kernel-xen

3. Configure grub.conf

# vim /etc/grub.conf

default=0

timeout=5

splashimage=(hd0,0)/grub/splash.xpm.gz

hiddenmenu

title CentOS (3.7.10-1.el6xen.x86_64)

root (hd0,0)

kernel /xen.gz dom0_mem=1024M,max:1024M dom0_max_vcpus=1 dom0_vcpus_pin cpufreq=xen

module /vmlinuz-3.7.10-1.el6xen.x86_64 ro root=/dev/mapper/vg0-root rd_NO_LUKS rd_NO_DM.UTF-8 rd_LVM_LV=vg0/swap rd_NO_MD SYSFONT=latarcyrheb-sun16 crashkernel=auto rd_LVM_LV=vg0/root KEYBOARDTYPE=pc KEYTABLE=us rhgb crashkernel=auto quiet rhgb quiet

module /initramfs-3.7.10-1.el6xen.x86_64.img

title CentOS 6 (2.6.32-504.el6.x86_64)

root (hd0,0)

kernel /vmlinuz-2.6.32-504.el6.x86_64 ro root=/dev/mapper/vg0-root rd_NO_LUKS rd_NO_DM.UTF-8 rd_LVM_LV=vg0/swap rd_NO_MD SYSFONT=latarcyrheb-sun16 crashkernel=auto rd_LVM_LV=vg0/root KEYBOARDTYPE=pc KEYTABLE=us rhgb crashkernel=auto quiet rhgb quiet

initrd /initramfs-2.6.32-504.el6.x86_64.img

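After configuration, reboot the Linux system. When it comes back up, it boots straight into the Xen Dom0 environment:

Check the kernel version; it has been upgraded to 3.7.10: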

# uname -r

3.7.10-1.el6xen.x86_64

Check whether the xend service is enabled at boot:

# chkconfig --list xend

xend 0:off 1:off 2:off 3:on 4:on 5:on 6:off

4. Start the xend service

# service xend start

List the virtual machines that are currently running:

# xm list

Name ID Mem VCPUs State Time(s)

Domain-0 0 1024 1 r----- 23.7

View the Xen host information:

# xm info

host : test2.stu31.com

release : 3.7.10-1.el6xen.x86_64

version : #1 SMP Thu Feb 5 12:56:19 CST 2015

machine : x86_64

nr_cpus : 1

nr_nodes : 1

cores_per_socket : 1

threads_per_core : 1

cpu_mhz : 2272

hw_caps : 078bfbff:28100800:00000000:00000140:00000209:00000000:00000001:00000000

virt_caps :

total_memory : 2047

free_memory : 998

free_cpus : 0

xen_major : 4

xen_minor : 2

xen_extra : .5-38.el6

xen_caps : xen-3.0-x86_64 xen-3.0-x86_32p

xen_scheduler : credit

xen_pagesize : 4096

platform_params : virt_start=0xffff800000000000

xen_changeset : unavailable

xen_commandline : dom0_mem=1024M,max:1024M dom0_max_vcpus=1 dom0_vcpus_pin cpufreq=xen

cc_compiler : gcc (GCC) 4.4.7 20120313 (Red Hat 4.4.7-11)

cc_compile_by : mockbuild

cc_compile_domain : centos.org

cc_compile_date : Tue Jan 6 12:04:11 CST 2015

xend_config_format : 4

Configure the iSCSI client on this node to discover the server's shared storage in the same way as above (steps omitted).

III. Building the busybox virtual machine on the Xen virtualization environment

This only needs to be done on one of the nodes.

1. Use the iSCSI shared storage as the location of the virtual machine's disk

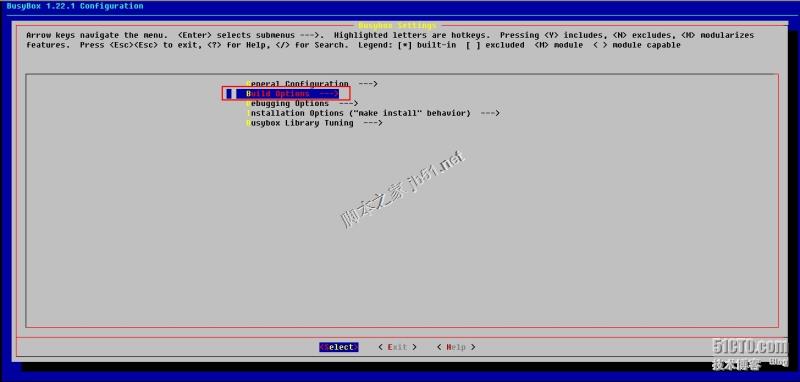

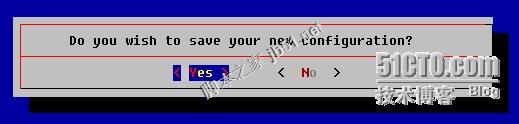

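Format the shared storage:

# mke2fs -t ext4 /dev/sdb1

Create a directory and mount the storage on it:

# mkdir /scsistore

# mount /dev/sdb1 /scsistore/

Change into the directory and create the virtual machine disk image:

# cd /scsistore

# dd if=/dev/zero of=./busybox.img bs=1M oflag=direct seek=1023 count=1

Check the size. The file has an apparent size of 1 GB but actually occupies only about 1 MB:

# ll -h

total 1.1M

-rw-r--r-- 1 root root 1.0G Feb 6 20:17 busybox.img

drwx------ 2 root root 16K Feb 6 20:05 lost+found

Format the virtual disk image:

# mke2fs -t ext4 /scsistore/busybox.img

mke2fs 1.41.12 (17-May-2010)

/scsistore/busybox.img is not a block special device.

Proceed anyway? (y,n)

The later steps copy files to /mnt and then unmount /mnt, so the formatted image is assumed to be mounted at /mnt at this point.

2. Compile and install busybox

Install the development package groups needed for the build environment:

# yum groupinstall -y "Development tools"

# yum install -y ncurses-devel glibc-static

Obtain the busybox source: busybox-1.22.1.tar.bz2

Compile and install busybox:

# tar xf busybox-1.22.1.tar.bz2

# cd busybox-1.22.1

# make menuconfig

Configure it as shown in the figure below:

After configuring, compile and install:

# make && make install

When the build finishes, the installed files are placed in the _install directory under the source directory. Copy the contents of _install to the mounted virtual disk:

# cp -a _install/* /mnt

# cd /mnt

# ls

bin linuxrc lost+found sbin usr

# rm -rf linuxrc

# mkdir dev proc sys lib/modules etc/rc.d boot mnt media opt misc -pv

The virtual disk is now complete.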
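The `dd` command used earlier for busybox.img writes a single block after seeking past the rest of the file, which is why the image reports 1.0G but takes up only about 1 MB. A small sketch of the same trick, assuming GNU `dd` on Linux; the 16 MiB size and the temporary file are choices made for this demo only, and `oflag=direct` is left out because some temporary file systems reject it.

```shell
# Create a small sparse file the same way the article creates busybox.img,
# then compare its apparent size with the space it actually occupies.
# The 16 MiB size and the temporary path are assumptions for the demo.
img="$(mktemp)"
dd if=/dev/zero of="$img" bs=1M seek=15 count=1 2>/dev/null

apparent_bytes="$(wc -c < "$img" | tr -d ' ')"   # logical file size in bytes
used_kib="$(du -k "$img" | cut -f1)"             # space actually allocated

echo "apparent: $apparent_bytes bytes, allocated: ${used_kib} KiB"
rm -f "$img"
```

On a file system that supports sparse files, the allocated figure should be much smaller than the apparent one.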

3. Configure the bridge device on the virtualization nodes

Both nodes need this configuration.

1). Node test1:

Add the bridge device file:

# cd /etc/sysconfig/network-scripts/

# cp ifcfg-eth0 ifcfg-xenbr0

Configure the bridge device:

# vim ifcfg-xenbr0

DEVICE="xenbr0"

BOOTPROTO="static"

NM_CONTROLLED="no"

ONBOOT="yes"

TYPE="Bridge"

IPADDR=172.16.31.1

NETMASK=255.255.0.0

GATEWAY=172.16.0.1

Configure the network interface:

# vim ifcfg-eth0

DEVICE="eth0"

BOOTPROTO="static"

HWADDR="08:00:27:16:D9:AA"

NM_CONTROLLED="no"

ONBOOT="yes"

BRIDGE="xenbr0"

TYPE="Ethernet"

USERCTL="no"

2). Node test2:

Add the bridge device file:

# cd /etc/sysconfig/network-scripts/

# cp ifcfg-eth0 ifcfg-xenbr0

Configure the bridge device:

# vim ifcfg-xenbr0

DEVICE="xenbr0"

BOOTPROTO="static"

NM_CONTROLLED="no"

ONBOOT="yes"

TYPE="Bridge"

IPADDR=172.16.31.2

NETMASK=255.255.0.0

GATEWAY=172.16.0.1

Configure the network interface:

# vim ifcfg-eth0

DEVICE="eth0"

BOOTPROTO="static"

HWADDR="08:00:27:6A:9D:57"

NM_CONTROLLED="no"

ONBOOT="yes"

BRIDGE="xenbr0"

TYPE="Ethernet"

USERCTL="no"

3). Bridged mode requires the NetworkManager service to be turned off:

Do this on both nodes:

# chkconfig NetworkManager off

# service NetworkManager stop

Then restart the network service:

# service network restart

4). Log in at the console and check the bridge status:

# ifconfig

eth0 Link encap:Ethernet HWaddr 08:00:27:16:D9:AA

inet6 addr: fe80::a00:27ff:fe16:d9aa/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:37217 errors:0 dropped:7 overruns:0 frame:0

TX packets:4541 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:7641467 (7.2 MiB) TX bytes:773075 (754.9 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:12 errors:0 dropped:0 overruns:0 frame:0

TX packets:12 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1032 (1.0 KiB) TX bytes:1032 (1.0 KiB)

xenbr0 Link encap:Ethernet HWaddr 08:00:27:16:D9:AA

inet addr:172.16.31.1 Bcast:172.16.255.255 Mask:255.255.0.0

inet6 addr: fe80::a00:27ff:fe16:d9aa/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:1211 errors:0 dropped:0 overruns:0 frame:0

TX packets:90 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:116868 (114.1 KiB) TX bytes:15418 (15.0 KiB)

4. Build the busybox virtual machine

Create the virtual machine configuration file:

# vim /etc/xen/busybox

kernel = "/boot/vmlinuz-2.6.32-504.el6.x86_64"

ramdisk = "/boot/initramfs-2.6.32-504.el6.x86_64.img"

name = "busybox"

memory = "512"

vcpus = 1

disk = ['file:/scsistore/busybox.img,xvda,w',]

root = "/dev/xvda ro"

extra = "selinux=0 init=/bin/sh"

vif = ['bridge=xenbr0',]

on_crash = "destroy"

on_reboot = "destroy"

on_shutdown = "destroy"

Copy the configuration file to node test2:

# scp /etc/xen/busybox root@172.16.31.2:/etc/xen/

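If we want the busybox user space to be able to configure a network interface, we also need to place xen-netfront.ko in the appropriate directory on the virtual machine's disk.

Copy the xen-netfront.ko module from Dom0 into the lib/modules/ directory on the virtual machine disk:

# cd /lib/modules/2.6.32-504.el6.x86_64/kernel/drivers/net/

First check the module's dependencies:

# modinfo xen-netfront.ko

filename: xen-netfront.ko

alias: xennet

alias: xen:vif

license: GPL

description: Xen virtual network device frontend

srcversion: 5C6FC78BC365D9AF8135201

depends:

vermagic: 2.6.32-504.el6.x86_64 SMP mod_unload modversions

The module has no dependencies, so we can use it directly: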

# cp xen-netfront.ko /mnt/lib/modules/

After copying, unmount the virtual machine disk:

# umount /mnt

At this point our busybox virtual machine has been created.

5. Unmount scsistore and mount it on the other Xen virtualization platform as a test

Unmount on node test1:

[root@test1 xen]# umount /scsistore/

Discover the iSCSI shared storage on node test2:

[root@test2 ~]# iscsiadm -m discovery -t st -p 172.16.31.3

Starting iscsid: [ OK ]

172.16.31.3:3260,1 iqn.2015-02.com.stu31:t1

Log in to the node to register the iSCSI shared device:

[root@test2 ~]# iscsiadm -m node -T iqn.2015-02.com.stu31:t1 -p 172.16.31.3 -l

[root@test2 ~]# fdisk -l /dev/sdb

Disk /dev/sdb: 5378 MB, 5378310144 bytes

166 heads, 62 sectors/track, 1020 cylinders

Units = cylinders of 10292 * 512 = 5269504 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x8e1d9dd0

Device Boot Start End Blocks Id System

/dev/sdb1 1 409 2104683 83 Linux

Mount the disk and check its contents:

[root@test2 ~]# mkdir /scsistore

[root@test2 ~]# mount /dev/sdb1 /scsistore/

[root@test2 ~]# ls /scsistore/

busybox.img lost+found

The files are visible on both nodes, so our iSCSI shared storage is working properly.

IV. Testing live migration of a virtual machine between the two virtualization platforms

1. Mount the shared storage on both virtualization nodes

Node test1:

[root@test1 ~]# mount /dev/sdb1 /scsistore/

[root@test1 ~]# ls /scsistore/

busybox.img lost+found

Node test2:

[root@test2 ~]# mount /dev/sdb1 /scsistore/

[root@test2 ~]# ls /scsistore/

busybox.img lost+found

Note that ext4 is not a cluster file system. Mounting it read-write on two nodes at once risks corrupting it, so this layout is suitable only for a throwaway lab like this one.

2. Start the busybox virtual machine

Start it on node test1:

[root@test1 ~]# xm create -c busybox

Using config file "/etc/xen/busybox".

Started domain busybox (id=13)

Initializing cgroup subsys cpuset

Initializing cgroup subsys cpu

Linux version 2.6.32-504.el6.x86_64 (mockbuild@c6b9.bsys.dev.centos.org) (gcc version 4.4.7 20120313 (Red Hat 4.4.7-11) (GCC) ) #1 SMP Wed Oct 15 04:27:16 UTC 2014

Command line: root=/dev/xvda ro selinux=0 init=/bin/sh

# (output omitted...) Load the network module:

/ # insmod /lib/modules/xen-netfront.ko

Initialising Xen virtual ethernet driver.

# Set the interface IP address:

/ # ifconfig eth0 172.16.31.4 up

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 00:16:3E:49:E8:18

inet addr:172.16.31.4 Bcast:172.16.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:42 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:2942 (2.8 KiB) TX bytes:0 (0.0 B)

Interrupt:247

Start it on node test2:

[root@test2 ~]# xm create -c busybox

Using config file "/etc/xen/busybox".

Started domain busybox (id=2)

Initializing cgroup subsys cpuset

Initializing cgroup subsys cpu

Linux version 2.6.32-504.el6.x86_64 (mockbuild@c6b9.bsys.dev.centos.org) (gcc version 4.4.7 20120313 (Red Hat 4.4.7-11) (GCC) ) #1 SMP Wed Oct 15 04:27:16 UTC 2014

Command line: root=/dev/xvda ro selinux=0 init=/bin/sh

# (output omitted...)

EXT4-fs (xvda): mounted filesystem with ordered data mode. Opts:

dracut: Mounted root filesystem /dev/xvda

dracut: Switching root

/bin/sh: can't access tty; job control turned off

/ # ifconfig

/ # insmod /lib/modules/xen-netfront.ko

Initialising Xen virtual ethernet driver.

/ # ifconfig eth0 172.16.31.5 up

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 00:16:3E:41:B0:32

inet addr:172.16.31.5 Bcast:172.16.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:57 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:3412 (3.3 KiB) TX bytes:0 (0.0 B)

Interrupt:247

Tip: press CTRL+] to leave the virtual machine console.

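I created the busybox virtual machine on both platforms only to check that each of them can run a virtual machine. For the migration itself we need just one busybox instance, which we will migrate from node test1 to node test2.

First shut down all the virtual machines:

# xm destroy busybox

3. Configure the nodes for live migration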

Configuration on node test1:

[root@test1 ~]# grep xend /etc/xen/xend-config.sxp | grep -v "#"

(xend-http-server yes)

(xend-unix-server yes)

(xend-relocation-server yes)

(xend-relocation-port 8002)

(xend-relocation-address '172.16.31.1')

(xend-relocation-hosts-allow '')

Configuration on node test2:

[root@test2 ~]# grep xend /etc/xen/xend-config.sxp | grep -v '#'

(xend-http-server yes)

(xend-unix-server yes)

(xend-relocation-server yes)

(xend-relocation-port 8002)

(xend-relocation-address '172.16.31.2')

(xend-relocation-hosts-allow '')

Restart the xend service on both virtualization nodes:

# service xend restart

Stopping xend daemon: [ OK ]

Starting xend daemon: [ OK ]

Check the listening port:

# ss -tunl | grep 8002

tcp LISTEN 0 5 172.16.31.2:8002 *:*

Start busybox on node test1:

# xm create -c busybox

# (output omitted...)

# Set an IP address so that we have something to check after the migration:

/ # insmod /lib/modules/xen-netfront.ko

Initialising Xen virtual ethernet driver.

/ # ifconfig eth0 172.16.31.4 up

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 00:16:3E:28:BB:F6

inet addr:172.16.31.4 Bcast:172.16.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:921 errors:0 dropped:0 overruns:0 frame:0

TX packets:2 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:78367 (76.5 KiB) TX bytes:84 (84.0 B)

Interrupt:247

Migrate the virtual machine from node test1 to node test2:

[root@test1 ~]# xm migrate -l busybox 172.16.31.2
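A migration like the one above only works if the relocation server is enabled on the receiving node. As a rough pre-flight sketch, the snippet below reads active `(key value)` entries from a file in the xend-config.sxp style. `sxp_value` is a hypothetical helper, and the sample file is a temporary copy of the settings shown in this article rather than the real /etc/xen/xend-config.sxp.

```shell
# sxp_value is a hypothetical helper: print the value of an active
# (uncommented) "(key value)" entry from an xend-config.sxp style file.
# $1 = file, $2 = key
sxp_value() {
    sed -n "s/^[[:space:]]*($2[[:space:]][[:space:]]*\(.*\))[[:space:]]*\$/\1/p" "$1" |
        tail -n 1
}

# Sample settings copied from this article into a temporary file.
conf="$(mktemp)"
cat > "$conf" <<'EOF'
#(xend-relocation-server no)
(xend-relocation-server yes)
(xend-relocation-port 8002)
(xend-relocation-address '172.16.31.2')
(xend-relocation-hosts-allow '')
EOF

if [ "$(sxp_value "$conf" xend-relocation-server)" = "yes" ]; then
    echo "relocation server enabled on port $(sxp_value "$conf" xend-relocation-port)"
else
    echo "relocation server is not enabled"
fi
rm -f "$conf"
```

Commented-out lines are ignored because the pattern requires the opening parenthesis to be the first non-blank character on the line.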

After the migration, check the virtual machine list on node test1:

[root@test1 ~]# xm list

Name ID Mem VCPUs State Time(s)

Domain-0 0 1023 1 r----- 710.1

After the migration, check the virtual machines on node test2:

[root@test2 network-scripts]# xm list

Name ID Mem VCPUs State Time(s)

Domain-0 0 1023 1 r----- 142.8

[root@test2 network-scripts]# xm list

Name ID Mem VCPUs State Time(s)

Domain-0 0 1023 1 r----- 147.4

busybox 3 512 0 --p--- 0.0

Connect to the virtual machine on test2 and check:

[root@test2 ~]# xm console busybox

Using NULL legacy PIC

Changing capacity of (202, 0) to 2097152 sectors

Changing capacity of (202, 0) to 2097152 sectors

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 00:16:3E:1D:38:69

inet addr:172.16.31.4 Bcast:172.16.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:350 errors:0 dropped:0 overruns:0 frame:0

TX packets:2 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:27966 (27.3 KiB) TX bytes:84 (84.0 B)

Interrupt:247

The virtual machine now running on test2 still has the address that was set on test1, which shows that the migration succeeded.

This completes our live migration experiment on the Xen virtualization platform.
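The manual check with `xm list` on each node can also be scripted. The sketch below reads `xm list` output and reports the State column for one domain. `domain_state` is a hypothetical helper, and the sample text is the test2 listing from this article, so the snippet runs without a Xen host.

```shell
# domain_state is a hypothetical helper: read `xm list` output on stdin and
# print the State column for the named domain; fail if the domain is absent.
domain_state() {
    awk -v name="$1" '
        NR > 1 && $1 == name { print $5; found = 1 }
        END { exit !found }
    '
}

# Sample listing copied from node test2 after the migration.
listing='Name ID Mem VCPUs State Time(s)
Domain-0 0 1023 1 r----- 147.4
busybox 3 512 0 --p--- 0.0'

if state="$(printf '%s\n' "$listing" | domain_state busybox)"; then
    echo "busybox is present, state: $state"
else
    echo "busybox is not on this node"
fi
```

On a real node, `xm list | domain_state busybox` would replace the sample text.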
